Virtual development of interactive sound design with real vehicle data

Audiokinetic + RemotiveLabs: A new collaboration that lets sound designers create interactive and immersive automotive audio experiences directly from real vehicle data instead of relying on manual controls or incomplete and inaccurate simulations. This enables rapid and efficient design of highly customizable and personalizable audio UX that needs little additional tuning when integrated in real vehicle production environments. Featuring insights from François Thibault, Head of Automotive Engineering at Audiokinetic.

The challenges in vehicle sound signal integration

As EVs become quieter, audio teams must create a new sound language for feedback and comfort that reflects brand identity while meeting regulations and high customer expectations. These projects often involve multiple internal teams and external suppliers, adding complexity that demands better tools for efficient collaboration.

Traditionally, everyone relies on a few scarce and expensive prototypes, creating a slow, siloed workflow where teams wait for vehicle access to test and integrate their work. Shipping delays, scheduling conflicts, and limited hands-on time hinder iteration, drive up costs, and reduce audio quality. Even once these challenges are solved for one vehicle, the workflow must still scale across models and regions, each with unique design requirements.

“With the proper tools and workflow, access to prototype vehicles is no longer something that should limit large and distributed teams to innovate in terms of audio user experience and should drastically speed up the transition by closing the gap between R&D and production.” — François Thibault, Head of Automotive Engineering at Audiokinetic.

A new, flexible integration: adopt signal-driven sound design

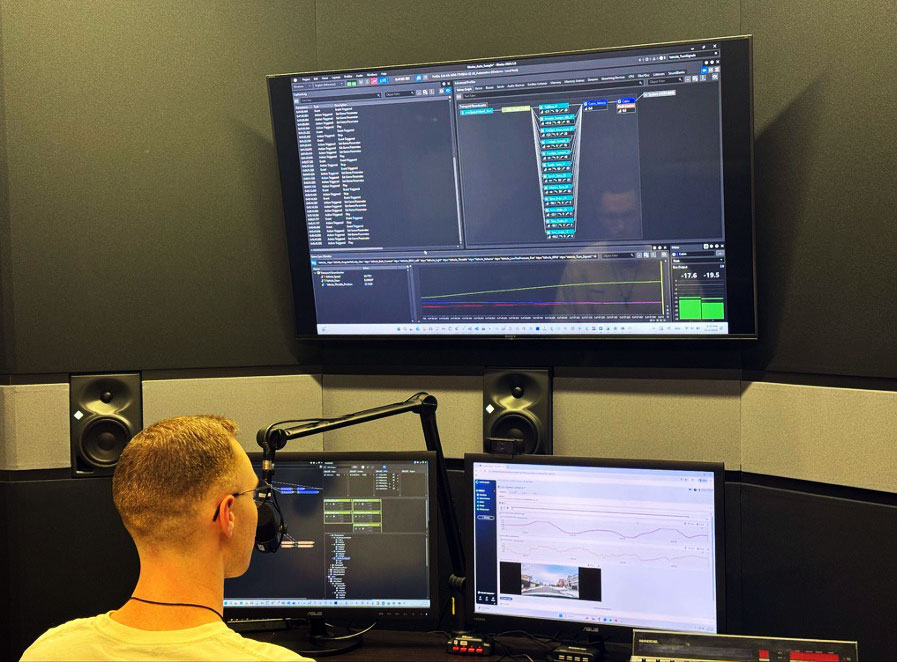

Audiokinetic has long focused on giving sound designers the freedom to create independently. Connecting Wwise with a platform for working with vehicle signals, including playback and adaptation across conditions and use cases, enables a workflow that extends seamlessly from idea to series production. Whether designing simple chimes, synthetic engine sounds for comfort or pedestrian awareness, 3D audio alerts for navigation or autonomous driving, or immersive ride-sonification tied to driving context, Wwise delivers the interactive audio capabilities needed.

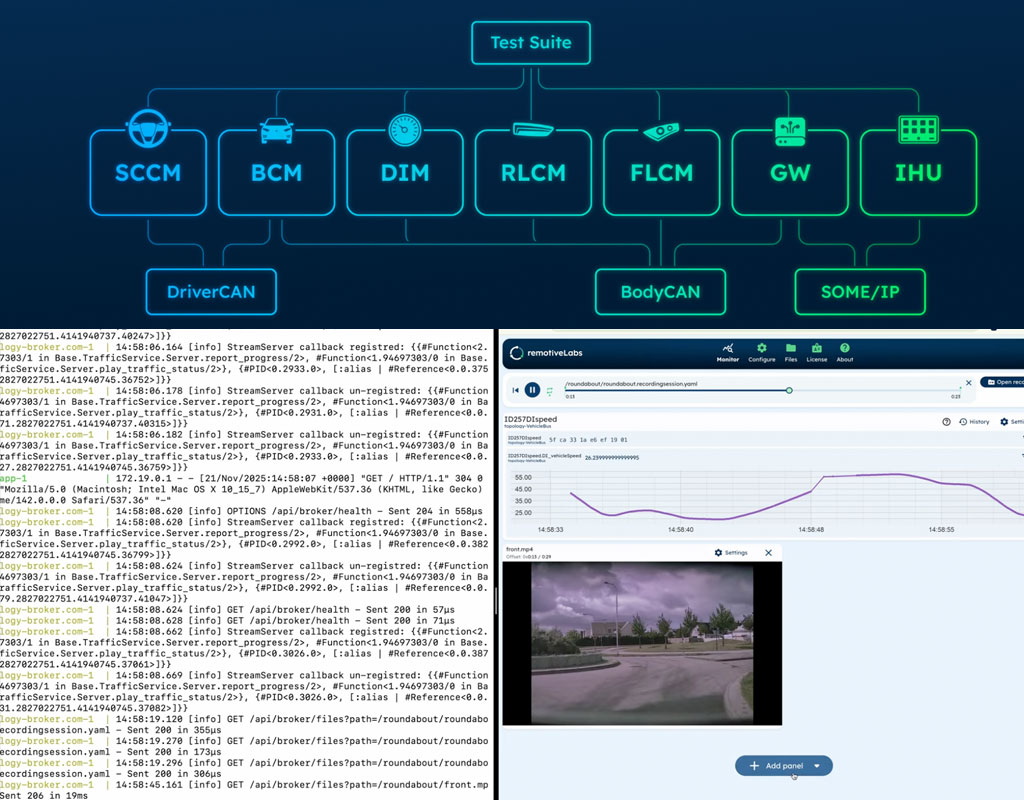

Interactive sound design depends on understanding what the vehicle is doing in real time. Through its integration with RemotiveLabs, Wwise can now use authentic vehicle data at any stage - whether from cloud-stored recordings or a virtually controllable car running on a true automotive network.

Audio-design requirements addressed by Audiokinetic and RemotiveLabs

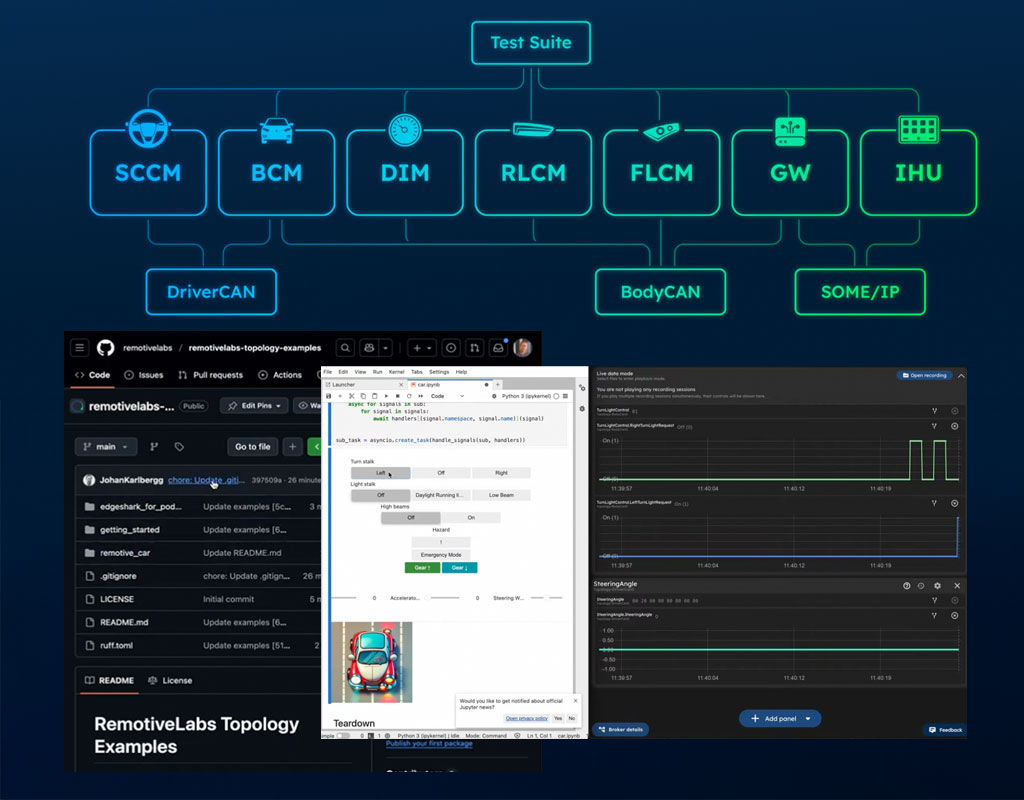

- Different vehicles speak different “data languages.” RemotiveLabs’ signal transformation makes it easy to standardize signals across vehicle models, adapt ranges, and even create virtual signals tailored to audio-triggering needs.

- It’s hard to identify the right vehicle signals for sound control. Rich data visualization helps sound designers quickly find the most relevant signals when working with new vehicles and fresh data captures.

- Testing sound designs in the right context is complex. By simply loading another recording and configuration, designers can experience how changes behave across vehicles, environments, and driving scenarios.

- Sharing sound concepts for feedback takes too much effort. With RemotiveLabs’ browser-based platform, designers can easily share audio prototypes with management, run surveys, or conduct focus-group evaluations in a controlled environment.
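As a rough illustration of the first point, signal standardization can be pictured as a per-vehicle mapping table that renames signals and rescales their ranges, plus derived "virtual" signals computed from the normalized values. The sketch below is plain Python and does not use the RemotiveLabs or Wwise APIs; the signal names, ranges, and the `engine_load` virtual signal are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SignalMap:
    """Maps one vehicle-specific signal onto a common name and a 0..1 range."""
    source: str   # name used on this vehicle's network (hypothetical)
    target: str   # common name the sound design listens to
    lo: float     # raw value mapped to 0.0
    hi: float     # raw value mapped to 1.0

    def apply(self, raw: float) -> float:
        scaled = (raw - self.lo) / (self.hi - self.lo)
        return min(1.0, max(0.0, scaled))  # clamp out-of-range samples


# Two hypothetical vehicles that report the same quantities differently.
VEHICLE_A = [
    SignalMap("VehicleSpeed_kph", "speed", 0.0, 200.0),
    SignalMap("AccelPedalPos_pct", "throttle", 0.0, 100.0),
]
VEHICLE_B = [
    SignalMap("Spd_mps", "speed", 0.0, 55.56),
    SignalMap("Throttle_raw", "throttle", 0.0, 255.0),
]


def normalize(frame: dict[str, float], maps: list[SignalMap]) -> dict[str, float]:
    """Convert one frame of raw signals into the common, normalized form."""
    out = {m.target: m.apply(frame[m.source]) for m in maps if m.source in frame}
    # Virtual signal: a made-up blend intended only for driving audio.
    if "speed" in out and "throttle" in out:
        out["engine_load"] = 0.7 * out["throttle"] + 0.3 * out["speed"]
    return out
```

With a table like this per vehicle, the same sound design can listen to `speed`, `throttle`, and `engine_load` regardless of which model produced the data.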

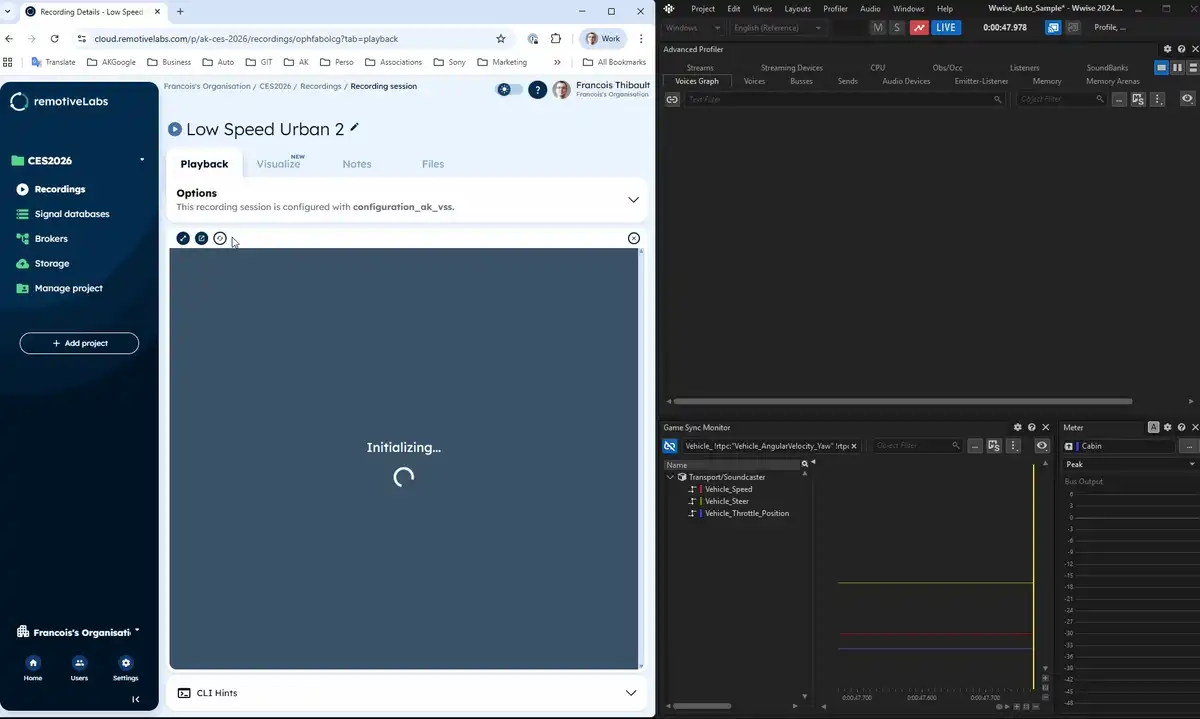

With simulation data readily available, Wwise’s real-time editing and profiling tools become even more powerful - making it easy to tune designs in context, run realistic performance benchmarks, and get results that closely mirror in-vehicle behavior. Hard-to-reproduce conditions can be analyzed by running long vehicle captures, using RemotiveLabs to pinpoint and annotate key moments, and then inspecting exactly what happened in the Wwise audio pipeline at any specific time.

Two ways to unlock audio creativity with a virtualized vehicle

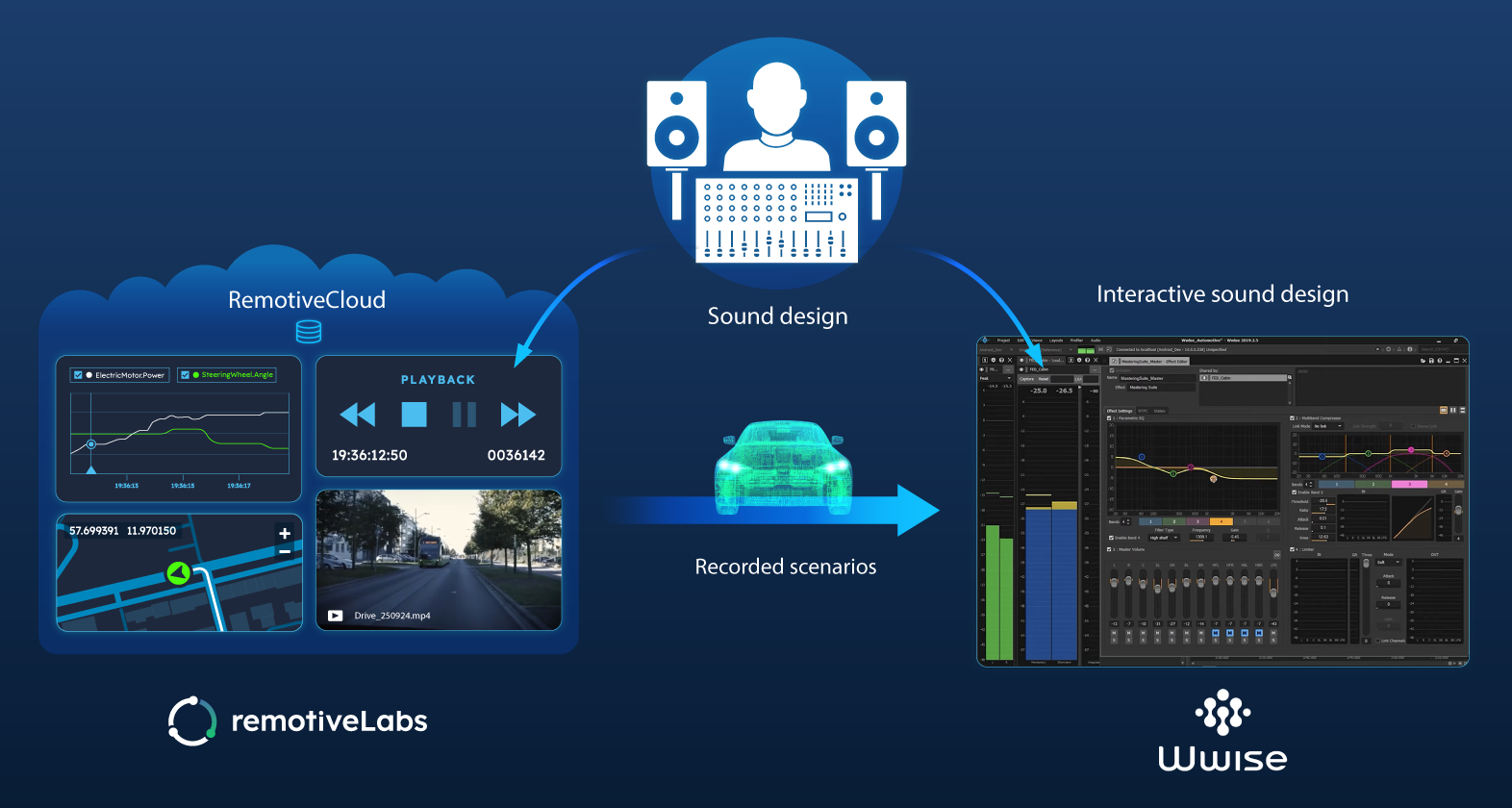

Cloud-powered with RemotiveCloud: Replay real-world recorded scenarios for regression, comparison, and CI/CD workflows, allowing designers to test audio behavior against a shared library of everyday and edge-case recordings long before a prototype exists. This keeps sound design consistent across iterations while making the process repeatable and fully automatable throughout development.

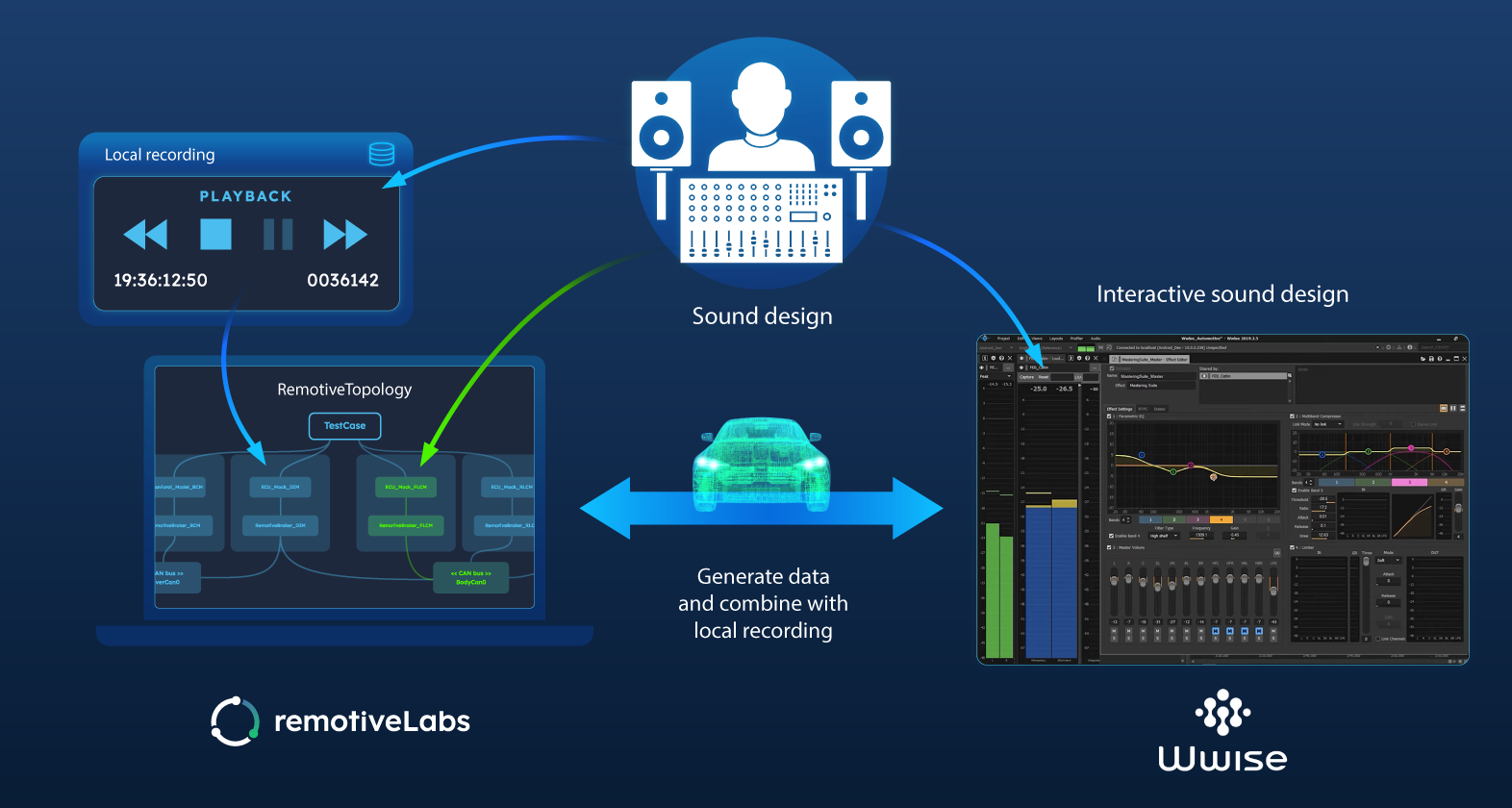

Local-first with RemotiveTopology: Run a virtual car locally on your laptop with RemotiveTopology to prototype behaviors in the studio, then deploy the same logic to an Android/Linux IVI system, an instrument cluster, or a centralized compute partition later. In this closed-loop environment, designers can inject signals (CAN, SOME/IP, GPS) to create custom scenarios and combine them with recorded data for parity across platforms. It’s ideal for edge-case generation and rapid iteration without waiting for hardware availability. Running everything locally also makes studio work and offline demos easier.
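Conceptually, combining recorded data with injected signals amounts to merging two timestamped streams into one timeline. The following sketch shows that idea in plain Python with made-up signal names; it is an assumption-laden illustration and does not represent RemotiveTopology's actual interface or any bus protocol.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Sample(NamedTuple):
    t: float      # seconds since the start of the scenario
    name: str     # signal name (hypothetical)
    value: float


def merge_streams(recorded: Iterable[Sample], injected: Iterable[Sample]) -> Iterator[Sample]:
    """Interleave two time-ordered streams into one time-ordered timeline."""
    return heapq.merge(recorded, injected, key=lambda s: s.t)


recorded = [
    Sample(0.0, "speed", 0.0),
    Sample(1.0, "speed", 12.0),
    Sample(2.0, "speed", 25.0),
]
# An edge case that is hard to capture on the road: an alert during acceleration.
injected = [Sample(1.5, "obstacle_alert", 1.0)]

timeline = list(merge_streams(recorded, injected))
```

Both inputs must already be sorted by time; `heapq.merge` then yields the combined timeline lazily, which keeps the approach usable for long captures.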

Unlocking new vehicle audio design use cases

This collaboration is a game-changer for automotive interactive audio design: it removes friction and brings relevance and speed to new strategic areas of sound innovation.

- Synthetic engine sound: Pull from a library of pre-recorded vehicles and situations to quickly present different sound design iterations to stakeholders and converge on the right sound signature for a vehicle.

- 3D audio alerts: Inject or generate data for rare, difficult-to-replicate conditions. Refine spatial cues that build driver trust without waiting for track testing.

- In-car gaming and new media experiences: Correlate maps, motion, visuals, and audio to craft in-car gaming or ride sonification. Align sensory cues to reduce motion sickness and create new passenger experiences.

From prototyping and into production

The integration supports teams from early R&D all the way to SOP and beyond:

- CI-ready workflows: Store and replay recordings in CI/CD pipelines.

- Regression-proof audio: Automatically test sound design on every build.

- No wasted effort: The same assets flow from ideation → prototype → production.

- Variant coverage: Scale globally by checking trims and regions automatically.
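One hedged way to picture "regression-proof audio" is a test that replays a stored recording through the signal-to-parameter logic and compares the result with a previously approved reference. The sketch below is generic Python; `pitch_from_speed`, the recording, and the tolerance are illustrative assumptions, not part of either product.

```python
def pitch_from_speed(speed_kph: float) -> float:
    """Hypothetical mapping from vehicle speed to a synthetic-engine pitch ratio."""
    return 1.0 + min(speed_kph, 200.0) / 200.0


def replay(recording: list[float]) -> list[float]:
    """Run every recorded speed sample through the audio parameter logic."""
    return [pitch_from_speed(v) for v in recording]


def find_regressions(actual: list[float], reference: list[float], tol: float = 1e-3) -> list[int]:
    """Return indices where the new build deviates from the approved reference."""
    if len(actual) != len(reference):
        raise ValueError("recording and reference differ in length")
    return [i for i, (a, r) in enumerate(zip(actual, reference)) if abs(a - r) > tol]


recording = [0.0, 50.0, 100.0, 250.0]   # stand-in for a stored drive capture
reference = [1.0, 1.25, 1.5, 2.0]       # outputs approved on an earlier build
regressions = find_regressions(replay(recording), reference)
```

A CI job could fail the build whenever `regressions` is non-empty, and run the same check once per recording to cover trims and regions.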
