Why automotive testing is broken - and how to fix it

Insights from Robert Fey, Synopsys: With more than 15 years in automotive test and verification, Robert “Mr. Broken Testing” Fey has seen firsthand why modern vehicle software is so complex to test - and what the consequences are when teams can’t keep pace. His proposal? Shift focus to behavioural intent: a clear, shared description of expected system behaviour that stays stable even when code, architecture, or organisation around it changes.

The hidden forces behind broken automotive testing

In this interview, automotive testing expert Robert Fey explains what makes automotive software fundamentally different, why testing so often breaks down, and how a behaviour-driven approach can unlock faster and more reliable development for software-defined vehicles.

How would you describe the broken testing pattern in automotive?

Big-bang integration!

Imagine you’re making a software change - a feature, a fix, an updated function. You run some basic checks. Meanwhile, the other 10–20 people on your team are doing the same thing, each committing multiple changes per day. But there is rarely a stepwise, staged path through the product. Maybe there’s a CI job or a nightly build, but automotive development still revolves around large milestones. Everyone waits for “the integration phase”, where all components finally come together, often weeks or months later. The outcome is predictable: huge debugging efforts, and no one remembers what they changed during that period. Imagine making a change today and only seeing its effect 20 weeks later on a HIL rig. I personally have no clue what I changed 20 weeks ago. Multiply this by the number of developers working without shared transparency and the system becomes unmanageable.

What makes automotive software so difficult to test?

Most problems come down to software complexity. Everyone knows they shouldn’t do big-bang integrations and that they should start small and scale up - but they don’t do it. The complexity isn’t accidental; it’s structural. Think of the number of people you need just to get a good overview of what you want to test. Take Volkswagen as an example: they had some 50,000 people in development, working with Tier 1 and Tier 2 suppliers, multiple ECUs and multiple environments, and I wouldn’t be surprised if half a million people actually work on bringing a single car model to life. And it keeps getting more complicated: as ECUs are consolidated, everything becomes more interconnected and harder to observe, dependencies become harder to understand, and you still have to simulate the 1000 signals coming from the outside. The more interfaces, the more combinations there are, and the more you have to keep in mind when building up tests. Requirements tend to overlap, be missing, or cut across functions - and are you testing a piece of software, a unit, or an application?
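To put a rough number on the point about interfaces and combinations, here is a minimal back-of-the-envelope sketch. It assumes, purely for illustration, that every external signal is independent and takes only a small fixed number of values; real signals are often continuous and correlated, so this is a simplification and not a model of any actual vehicle. The function name `input_combinations` is hypothetical.

```python
def input_combinations(values_per_signal: int, signal_count: int) -> int:
    """Number of distinct input situations if every signal is independent.

    Assumption for illustration only: each signal takes the same small,
    fixed number of discrete values.
    """
    return values_per_signal ** signal_count


# Even with just two values per signal, the count explodes with signal count.
for signal_count in (10, 100, 1000):
    combos = input_combinations(2, signal_count)
    # Report the order of magnitude rather than the full integer.
    print(f"{signal_count} binary signals: roughly 10^{len(str(combos)) - 1} combinations")
```

Exhaustive testing is out of reach long before the 1000 signals mentioned above, which is one reason tests have to be organised around intended behaviour rather than around enumerating inputs.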

Watch the full SDV Coffee Talk episode featuring Robert Fey, Dr. Fatih Tekin, and RemotiveLabs’ Emil Dautovic as they discuss integration challenges, “China speed,” and practical ways to improve SDV development.

How should teams approach testing in automotive to be successful?

According to Robert, the biggest pain point is maintaining tests - most tests break because abstraction and encapsulation are missing. It’s not about executing a single test, but aligning everyone on what should be tested and how components interact. For Robert, success starts with testing behaviour, not implementation. His four principles are:

- Describe the intent first: Clearly define what the feature should do - conditions, outputs, interactions. Intent stays stable even when code changes.

- Keep tests abstract and stimulus-independent: Don’t hard-wire tests to internal details; good tests survive architectural shifts.

- Automate stimulation, coverage, and expected behaviour: Let tools generate input scenarios, compute coverage, and derive expected outcomes to reduce maintenance.

- Ensure full traceability: Link requirement → situation → test → outcome so teams can see exactly what broke, why, and where.
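The four principles above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not TPT, ATEF, or any Synopsys API: the wiper feature, the requirement IDs, the signal names, and every class and function name here are hypothetical. Each intent is written against observable inputs and outputs only, stimuli are generated rather than hand-written, and every outcome carries the requirement → situation → test → outcome link.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List

Signals = Dict[str, float]


@dataclass(frozen=True)
class Intent:
    """Behavioural intent: what should happen, in which situation."""
    requirement_id: str                    # hypothetical requirement ID
    situation: str                         # human-readable condition
    applies: Callable[[Signals], bool]     # is this situation present in the inputs?
    expected: Callable[[Signals], bool]    # check on observable outputs only


@dataclass(frozen=True)
class Outcome:
    """Traceability record: requirement -> situation -> stimulus -> result."""
    requirement_id: str
    situation: str
    inputs: Signals
    passed: bool


def wiper_controller(inputs: Signals) -> Signals:
    """Stand-in system under test (hypothetical). Its internals can change
    freely; the intents below only look at its inputs and outputs."""
    raining = inputs["rain_intensity"] > 0.2
    ignition_on = inputs["ignition"] == 1.0
    return {"wiper_on": 1.0 if (raining and ignition_on) else 0.0}


INTENTS = [
    Intent("REQ-WIPER-001", "ignition on and rain detected",
           lambda s: s["ignition"] == 1.0 and s["rain_intensity"] > 0.2,
           lambda out: out["wiper_on"] == 1.0),
    Intent("REQ-WIPER-002", "ignition off",
           lambda s: s["ignition"] == 0.0,
           lambda out: out["wiper_on"] == 0.0),
]


def generate_stimuli() -> List[Signals]:
    """Automated stimulation: every combination of a few representative values."""
    return [{"ignition": ign, "rain_intensity": rain}
            for ign, rain in product([0.0, 1.0], [0.0, 0.1, 0.5, 1.0])]


def run(intents: List[Intent],
        system: Callable[[Signals], Signals],
        stimuli: List[Signals]) -> List[Outcome]:
    """Check every intent against every stimulus in which its situation occurs."""
    outcomes = []
    for inputs in stimuli:
        outputs = system(inputs)
        for intent in intents:
            if intent.applies(inputs):
                outcomes.append(Outcome(intent.requirement_id, intent.situation,
                                        inputs, intent.expected(outputs)))
    return outcomes


outcomes = run(INTENTS, wiper_controller, generate_stimuli())
covered = {o.requirement_id for o in outcomes}  # which requirements were exercised
for o in outcomes:
    print(o.requirement_id, "|", o.situation, "|", o.inputs, "|",
          "PASS" if o.passed else "FAIL")
```

Because the intents never reference the controller's internals, `wiper_controller` could be rewritten or moved to another ECU without touching the tests, and a failing `Outcome` points straight back to the requirement and situation that broke.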

Teams that follow this approach often see major productivity gains without adding new headcount. And as Robert notes, it’s not about the tooling - it’s about understanding testing and getting the maximum value out of the tools you use. This year, Synopsys launched ATEF (Automotive Test Efficiency Framework) - essentially Robert’s accumulated knowledge structured into a maturity model that helps teams identify gaps and grow their testing practice.

How will software-defined vehicles reshape testing?

Robert believes the industry is only at the beginning of a big shift. ECU-based requirement breakdowns no longer work when features live across software stacks. And with updates continuing long after SOP (start of production), OEM–supplier relationships are changing too. Where OEMs previously received a complete software package at fixed milestones, they increasingly need continuous and incremental maturity - supported by virtual ECUs, hybrid test environments, and toolchains that interoperate. Tooling providers in automotive need to make sure their tools work together, so that OEMs can rely on them every day without spending time verifying results between tools.

[nfobox]

Fact box:

Robert Fey: Manager for Synopsys’ Time Partition Testing (TPT) solution. 15+ years in automotive test and verification (VW Group / CARIAD / Carmeq). Specialises in behaviour-driven testing, integration quality, virtual ECU validation, and reducing dependence on late-stage, hardware-bound testing.

Synopsys capabilities: Beyond TPT, Synopsys offers Synopsys Silver virtual ECUs - enabling high-fidelity, software-first validation of production code in simulated environments. Silver is a key enabler for early functional testing and incremental maturity.

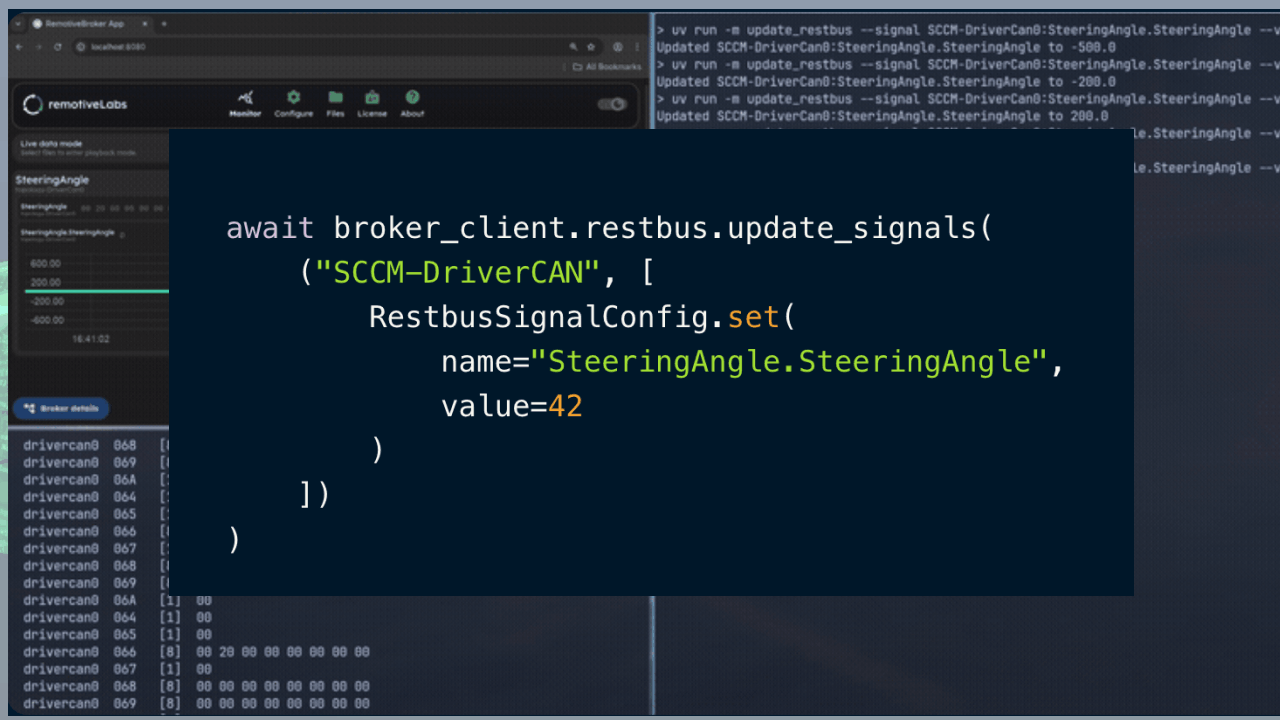

Connection to RemotiveLabs: Synopsys Silver integrates and runs with RemotiveTopology, combining high-fidelity vECUs with mocks, restbus simulations, and flexible system-level setups. The combination was initiated by an OEM request and allows teams to detect integration issues early, shorten feedback loops, and mature software long before hardware is available.

[/nfobox]
